HA!

You thought I’d gone away. But no, it was just another busy couple of weeks. Got the chance to give an invited talk at the American Library Association (in DC) all about the book, and then I was a discussant for a wonderful paper about interruptions at the Human Computer Interaction Consortium conference at Pajaro Dunes (near Monterey, CA). Those were both a lot of work, but also inspiring and extraordinarily interesting.

But it put me behind schedule. So here I am, back with you again to see what SRS we can do about this question: how much DO people know about history, about math, or about geography?

The key question was this: how would you assess “our” level of knowledge in these three areas? What does the “public” really know?

Our Challenge: What DO we know, and how do we know what we know?

1. Can you find a high-quality (that is, very credible) study of how well the citizens of the United States (or your country, if you’re from somewhere else) understand (A) history, (B) geography, (C) mathematics?

I’m always looking for new ways to answer questions like this. (That is, questions that are really difficult to search for.) It’s quick and easy to ask this type of question, but what do you DO?

I realize that this is going to take a bit of explaining–so I’m going to break up my answer into 2 separate posts. This is Part 1: “How much do we understand about history?” I’ll do part 2 later this week.

As I started thinking about this, it became obvious that there are a couple of key questions that we need to list out. In the book I call these “Research Questions,” and that’s what they are. I recommend to searchers that they actually write these down–partly to help organize your notes, but also partly to make it VERY clear what you’re searching for! In essence, they help to frame your research.

A. “How much do we…?” Who is “we”? For my purposes, I’m going to limit “we” to currently living people in the US. We’ll touch on global understanding later, but for this round, just US. (Of course, if you live in another country, you should do your place!) I’m hoping we can find data to measure this across the range of ages, although it might be simpler to find just student data to begin with.

B. “.. know about history?” How are we going to measure this? Ideally, we’d give some kind of history test to everyone in the US–but that’s not going to happen. An important question for us is what will count as a proxy measurement of historical knowledge? (That is, what’s a good way to measure historical knowledge? What organization is giving the survey/test/exam?)

Also, another underspecified part of this question is “..about history?” Are we trying to measure World History, or just US History knowledge?

C. “How well…” What does it mean to measure “how well the citizens .. understand…”? All tests implicitly have a standard, an expectation that they’re measuring against. In this case, how should we measure “how well”? We’ll have to figure this out when we learn how “citizen history understanding” is gauged.

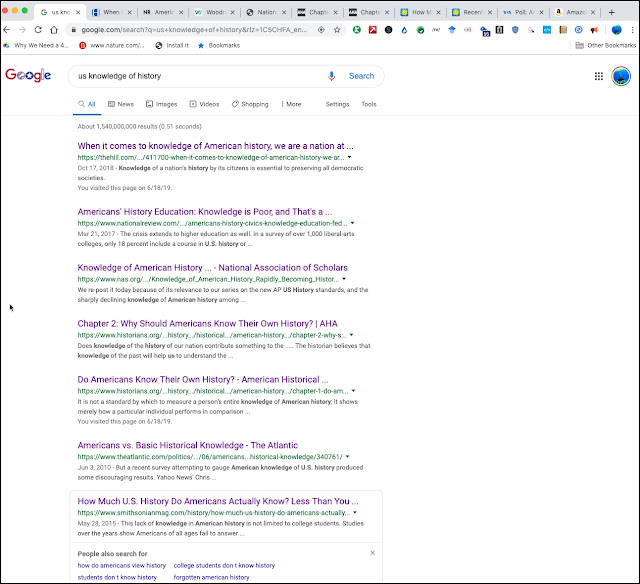

I started with the obvious query:

[ US knowledge of history ]

I wasn’t sure if this would work, but it gave some pretty interesting results, including a big hint that “American” is probably a useful search term:

Figure 2.

For this kind of topic (that is, one where I’m not sure where to begin) I opened a bunch of tabs in parallel. (On a Mac, you CMD+click on the link; on Windows it’s Ctrl+left-click.)

This is called parallel browsing [1] [2] and is a great way to look at a topic across its range without getting stuck in one particular interpretation. When parallel searching, your goal is to see the spectrum of opinions on a topic. In particular, you’ll want to pay attention to how many different sources you’re seeing, and what sources you’re reading.

Note how I’ve opened all of the top 7 search results in parallel:

Figure 3.

Now I can look at a bunch of these results and compare them. But, as always, you want to scan the result AND check for the organization (and author). For instance, in the above SERP there are results from TheHill.com, NationalReview.com, NAS.org, Historians.org, TheAtlantic.com, VOANews.com, and SmithsonianMag.com.

Let’s do a quick rundown of these sources. The best way I know to do this is to (1) go to the organization’s home page and do a quick overview scan; (2) search for the name of the organization, looking for articles about the org from other sources (and points of view); (3) search for the name of the org along with the keyword “bias.” Here’s an example of what my screen looks like when I’m in mid-review. In this case, I’m checking out the American Historical Association (that is, Historians.org)…

Figure 4.

In the bottom window you can see the AHA article about “Chapter 2: Why Should Americans Study History” (that’s link #4 in Figure 3). In the right window you can see my query: [ “American Historical Association” bias ] — this is a quick way to see if anyone else has written about possible biases in that org. In this case, the AHA org seems pretty kosher. There are articles about AHA that discuss their attempts to fight bias in various forms, but nobody seems to have written about their bias. (If you try this bias context term trick on the other orgs in this SERP, you’ll find very different results.)
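If you want to run the bias context term trick across every org in the SERP, here’s a minimal sketch that builds the queries for you. The function names and the search URL prefix are my own assumptions for illustration (any search engine that accepts a `q=` parameter would do); the org names come from the results listed above.

```python
from urllib.parse import quote_plus

# Organizations behind the seven results in the SERP discussed above.
ORGS = [
    "The Hill",
    "National Review",
    "National Association of Scholars",
    "American Historical Association",
    "The Atlantic",
    "Voice of America",
    "Smithsonian Magazine",
]


def bias_query(org_name: str) -> str:
    """Pair the exact org name (in double quotes) with the context term 'bias'."""
    return f'"{org_name}" bias'


def search_url(query: str, base: str = "https://www.google.com/search?q=") -> str:
    """Turn a query into a URL. The base URL is an assumption; swap in your own."""
    return base + quote_plus(query)


if __name__ == "__main__":
    for org in ORGS:
        query = bias_query(org)
        print(f"[ {query} ]  ->  {search_url(query)}")
```

Open each of those URLs in its own tab and you have the parallel-browsing version of the background check.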

An important SRS point to make: I open tabs in parallel as I’m exploring the main SERP, but I open a new window when I’m going to go in depth on a topic (and then open parallel tabs in there, rather than in the first window).

In the lower left window you’ll see the Wikipedia article about AHA. You can see that it’s been around for quite a while (chartered in 1884) as an association to promote historical studies, teaching, and preservation. The Wiki version of AHA is that it’s a scholarly org with an emphasis on collaboration as a way of doing history. That’s important, as it suggests that it’s a reasonably open organization.

Now.. back to our task of checking on the stance of each of these sources.

I’ll leave it to you to do all of the work, but here’s my summary of these sources:

TheHill.com – a political newspaper/magazine that claims “nonpartisan reporting on the inner workings of Congress and the nexus of politics and business.” AllSides.com (a bias ranking org) finds it a bit conservative.

NationalReview.com – shows up consistently as very conservative. (AllSides.com and Wikipedia agree.)

NAS.org (National Association of Scholars) – pretty clearly “opposes multiculturalism and affirmative action and seeks to counter what it considers a ‘liberal bias’ in academia.”

Historians.org (American Historical Association) – multi-voice, collaborative institution of long standing that tries to represent an unbiased view of history.

TheAtlantic.com – news magazine with a slightly left-of-center bias.

VOANews.com (Voice of America) – is part of the U.S. Agency for Global Media (USAGM), the government agency that oversees all non-military, U.S. international broadcasting. Funded by the U.S. Congress.

SmithsonianMag.com – rated by Media Bias Fact Check as a “pro-science” magazine with a good reputation for accuracy.

NOW, with that background, what do we have in that first page of results?

TheHill.com reports that

“…Only one in three Americans is capable of passing the U.S. citizenship exam. That was the finding of a survey recently conducted by the Woodrow Wilson National Fellowship Foundation of a representative sample of 1,000 Americans. Respondents were asked 20 multiple choice questions on American history, all questions that are found on the publicly available practice exam for the U.S. Citizenship Test.”

Okay, now we have to go still deeper and do the same background check on the Woodrow Wilson National Fellowship Foundation. Using the method above, I found that it’s a nonprofit founded in 1945 for supporting leadership development in education. As such, they have a bit of an interest in finding that they’re needed–for instance, to help teach history and civics.

But the survey mentioned above was actually conducted by Lincoln Park Strategies, a well-known political survey company that’s fairly Democratic, but also writes extensively on the reliability of surveys. (So while I might tend to be a little skeptical, a survey about historical knowledge is likely to be accurate.)

The key result from this survey is that only 36% of those 1,000 citizens who were surveyed could pass the citizenship test. (See a sample US citizenship test and see if you could pass!) Among their findings, only 24 percent could correctly identify something that Benjamin Franklin was famous for, with 37 percent believing he invented the lightbulb.
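To get a feel for how much weight a 1,000-person sample can carry, here’s a back-of-the-envelope margin-of-error calculation. It’s only a sketch: it assumes a simple random sample and a 95% confidence level, neither of which I can confirm from the survey summary, and the function name is mine.

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Normal-approximation margin of error for a proportion.

    Assumes a simple random sample; z = 1.96 corresponds to ~95% confidence.
    """
    return z * math.sqrt(p * (1 - p) / n)


p = 0.36   # share of respondents who passed, as reported
n = 1000   # sample size, as reported

moe = margin_of_error(p, n)
low, high = p - moe, p + moe
print(f"{p:.0%} passing, give or take {moe:.1%} ({low:.1%} to {high:.1%})")
```

Under those assumptions the uncertainty is about 3 percentage points either way, so even the optimistic end of the range is well under half of respondents passing.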

Note that this survey implicitly answers Research Questions B and C (from above): How do we measure historical knowledge? Answer: By using the Citizenship Test. And, How well do people do on the test? Answer: A “good” grade would be passing, that is, the passing grade for a new citizen.

What about the other sources?

The National Review article reports on a 2016 American Council of Trustees and Alumni report that historical knowledge is terrible (“… less than a quarter of twelfth-grade students passed a basic examination [of history] at a ‘proficient’ level.”).

Now we have to ask again, who/what is the “American Council of Trustees and Alumni”? The short answer: part of a group of very conservative “think tanks” and non-profits that are closely linked to far-right groups (e.g., the Koch Brothers).

So, while that information could well be true, we realize that there’s an agenda at work here. (I did look at their survey method as reported in the report above, and it seems reasonable.)

Meanwhile, the National Association of Scholars points to the US Education Department’s National Assessment of Educational Progress quadrennial survey, The Nation’s Report Card: U.S. History 2010. Looking at the original report shows that the NAS article accurately reflects the underlying data. While average scores on the test have improved over the past several years, the absolute scores are terrible. As they write: “…20 per cent of fourth grade students, seventeen per cent of eighth graders, and twelve per cent of high school seniors performed well enough to be rated “proficient.” It looks even worse when you invert those positive figures: eighty per cent of fourth graders, eighty-three per cent of eighth graders and eighty-eight per cent of high school seniors flunked the minimum proficiency rating.”

Wow. That’s pretty astounding.

Continuing onward:

The Historians.org article (“Chapter 2: Why Should Americans Know Their Own History”) is an argument for teaching history, but has no data in it. However, Chapter 1 of the same text at the same site talks about the data, but the crucial figure is MISSING. (And I couldn’t find it.) So this doesn’t count for much of anything.

In that same vein, The Atlantic’s article “Americans vs. Basic Historical Knowledge” is really a reprint from another (now defunct) journal, “The Wire.” This article decries the state of American students with a bunch of terrifying examples, but it points to yet another set of data that’s missing-in-action.

The VOA article, “Poll: Americans’ Knowledge of Government, History in ‘Crisis’” is yet ANOTHER reference to the American Council of Trustees and Alumni survey of 2016 (the same data source as the National Review article). This article is basically a set of pull quotes from that report.

What about the pro-science magazine, Smithsonian? Their article, “How Much U.S. History Do Americans Actually Know? Less Than You Think” says that the 2014 National Assessment of Educational Progress (NAEP) report found that only 18 percent of 8th graders were proficient or above in U.S. History and only 23 percent in Civics. (See the full report here, or the highlights here.)

Figure 5. NAEP history test scores for 8th graders, 1994 – 2014.

Figure 5 shows an excerpt from the full report, and when I saw it I thought it looked awfully familiar.

Remember the National Association of Scholars article from a few paragraphs ago? Yeah, that one. Turns out that this article and that article both point to the same underlying data. That is, the National Assessment of Educational Progress (NAEP)! This article points to the updated 2014 report (while the earlier article’s data is from 2010). This doesn’t count as a really new data set, it’s just an update of what we saw earlier. What’s more, the update in four years isn’t statistically different. It doesn’t count as a separate reference!

Sigh.

So what we have here, in the final analysis of the 7 web pages, is:

a. the NAEP data set (from 2010 and 2014)

b. the American Council of Trustees and Alumni data set (2016)

c. the Woodrow Wilson survey (which has a summary, but not much real data)

Everything else is either missing or a repeat.
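The bookkeeping behind that tally is simple enough to sketch in code: note which underlying study each article leans on, then group by study. The mapping below is just my reading of the seven pages as described above (with `None` where the data was missing); the names are mine.

```python
from collections import defaultdict

# Article site -> the underlying data set it relies on (None = data missing).
ARTICLE_SOURCES = {
    "TheHill.com": "Woodrow Wilson Foundation survey",
    "NationalReview.com": "ACTA report (2016)",
    "NAS.org": "NAEP U.S. History (2010/2014)",
    "Historians.org": None,
    "TheAtlantic.com": None,
    "VOANews.com": "ACTA report (2016)",
    "SmithsonianMag.com": "NAEP U.S. History (2010/2014)",
}


def group_by_study(article_sources):
    """Group articles by the study they cite, skipping those with no data."""
    groups = defaultdict(list)
    for article, study in article_sources.items():
        if study is not None:
            groups[study].append(article)
    return dict(groups)


groups = group_by_study(ARTICLE_SOURCES)
for study, articles in groups.items():
    print(f"{study}: {', '.join(articles)}")
print(f"{len(ARTICLE_SOURCES)} articles, {len(groups)} independent data sets")
```

Seven articles collapse to three data sets, which is the number that actually matters when you’re weighing the evidence.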

I went through the next couple of SERP pages and while I found lots of articles, I found that almost all of them basically repeat the data from this handful of studies.

As it turns out, these three (and the few other studies I found that were about specific historical questions, rather than history broadly speaking) all agree: We’re not doing well. In particular, on normed tests, or the Citizenship test, Americans don’t seem to know much about their history.

Of course, this alarm has been raised every few years since at least 1917 when Carleton Bell and David McCollum tested 668 Texas high school students and found that only one third of these teens knew that 1776 was the date of the Declaration of Independence. [3] Like that.

It’s a sobering thought to consider this on the July 4th holiday. (Which is, coincidentally, our agreed-upon celebration date of the signing–even though it took several days to actually sign the document, as the signatories were scattered across several states!) Like Bell and McCollum, I worry… but perhaps this is an inevitable worry. To me, it suggests that teaching and education need to remain permanent features of our intellectual landscape.

As should search.

Search on!

Search Lessons

There’s much to touch on here…

1. You have to go deep to look for redundancy. Just because we found 10 separate articles does NOT mean that there were 10 different studies that all agree. In this set of articles, there are really 3 common pieces of data.

2. Use parallel browsing to open up tabs that branch off the same SERP, and then use different windows to go deep on a particular topic. That’s what I do, and it certainly makes window management simpler!

3. Beware of lots of 404 errors (page not found). If a publication can’t keep references to their own pages up to date, you have to admit to being skeptical of their work overall. It’s inevitable to get some link-rot errors, but they shouldn’t be common, as they were in some sites I visited here. (Hint: If you want to write scholarly text that lasts, make sure you keep a copy of the data your article depends upon.)